Calculate the time taken by code snippets or a notebook to run in Databricks

This post shows how to measure the time taken by a code snippet or an entire notebook to run in Databricks. The same approach also gives the elapsed time of any particular piece or block of code.

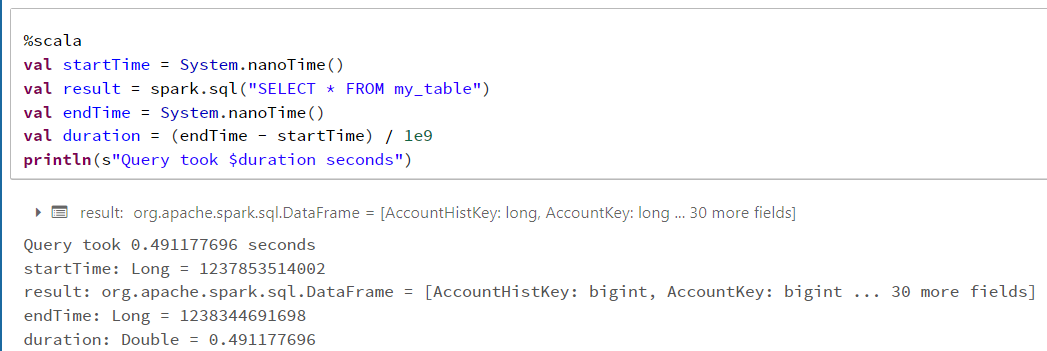

Code in Scala:

To check how much time a SQL query takes to run in Databricks:

val startTime = System.nanoTime()

val result = spark.sql("SELECT * FROM my_table")

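// show() is the action that actually runs the query. Spark evaluates lazily,
// so without an action such as show() or count() only query planning is timed.
result.show()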

val endTime = System.nanoTime()

val duration = (endTime - startTime) / 1e9

println(s"Query took $duration seconds")

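In the output above, startTime is not a calendar timestamp: System.nanoTime() returns nanoseconds counted from an arbitrary origin, so it is only meaningful for computing differences and cannot be converted into a date. To record when a run started, capture System.currentTimeMillis() instead, which is an epoch timestamp. To convert such a timestamp into a date, see: link to convert timestamp into date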

Code in Python/PySpark:

To check how much time a code snippet takes to run. The same approach also works for a whole Databricks notebook.

import time

start_time = time.time()

# <put the code here>

end_time = time.time()

elapsed_time = end_time - start_time

print(f'Elapsed time: {elapsed_time:.2f} seconds')

To calculate the time taken to run the whole notebook, or the time taken to overwrite data using a notebook, first import the time module.

Then set 'start_time' in the first cell of the notebook and 'end_time' in the last cell, followed by the rest of the code (the 'elapsed_time' calculation and the print statement) at the end of the notebook.
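The start/end pattern above can also be wrapped in a small helper so that every snippet inside a single cell is timed the same way. The following is a minimal sketch, not part of the original approach: the `timer` name and its `label` parameter are hypothetical, and it uses `time.perf_counter()` from the Python standard library, a clock intended for measuring intervals.

```python
import time
from contextlib import contextmanager


@contextmanager
def timer(label="block"):
    """Hypothetical helper: time the code inside a `with` block."""
    result = {"label": label, "seconds": None}
    start = time.perf_counter()
    try:
        yield result
    finally:
        # Runs even if the timed code raises, so the duration is always recorded
        result["seconds"] = time.perf_counter() - start
        print(f"{label} took {result['seconds']:.3f} seconds")


# Usage: replace the sleep with the code to be measured
with timer("sample work") as t:
    time.sleep(0.1)
```

A helper like this only covers code inside one `with` block, so timing a whole notebook across cells still needs the 'start_time' and 'end_time' variables in the first and last cells.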

- You can also use the Spark UI's SQL tab to see the time taken by each query in Spark SQL.

- Keep in mind that the time reported by these methods may not be accurate for very short-running queries or code snippets, and may also be affected by factors such as cluster size and data distribution.

- Another way to check the time taken is the %%time cell magic from IPython/Jupyter, where the notebook supports it. Note that it reports the time taken by the cell it is placed in, not by the entire notebook.
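The caveat above about very short-running snippets can be reduced by running the snippet many times and looking at the best or median time instead of a single measurement. This is a minimal sketch using `timeit.repeat` from the Python standard library; the `work` function is a hypothetical stand-in for the snippet being measured. It suits plain Python code best, since repeated Spark queries may be affected by caching.

```python
import statistics
import timeit


def work():
    """Hypothetical stand-in for a short snippet to measure."""
    return sum(range(10_000))


# Run the snippet 100 times per measurement, and take 5 measurements
runs_per_measurement = 100
totals = timeit.repeat(work, repeat=5, number=runs_per_measurement)

# Convert each total into an average time per call
per_call = [total / runs_per_measurement for total in totals]

print(f"best:   {min(per_call):.6f} seconds per call")
print(f"median: {statistics.median(per_call):.6f} seconds per call")
```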

-- Raman Gupta
